Architecture

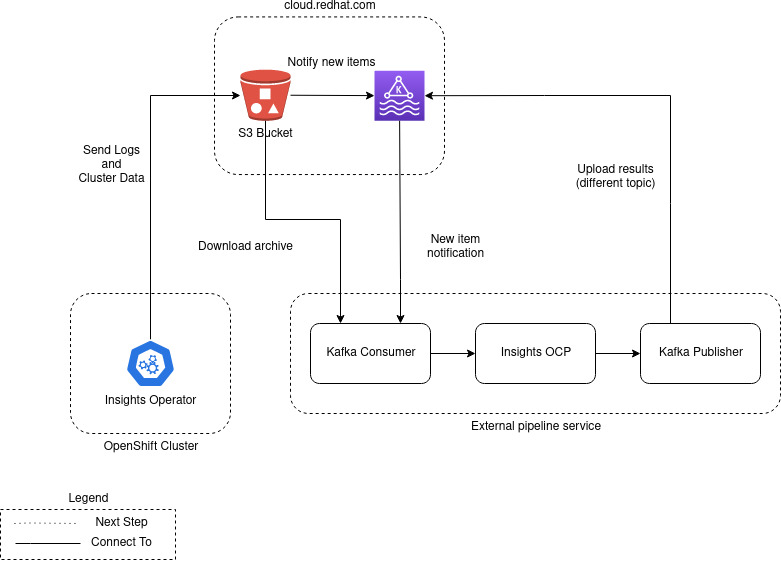

This service is built on top of insights-core-messaging framework and will be deployed and run inside cloud.redhat.com.

External data pipeline diagram

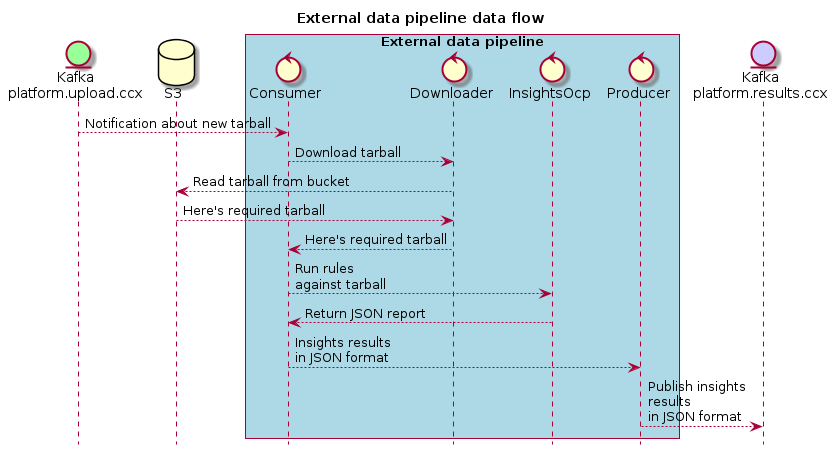

Sequence diagram

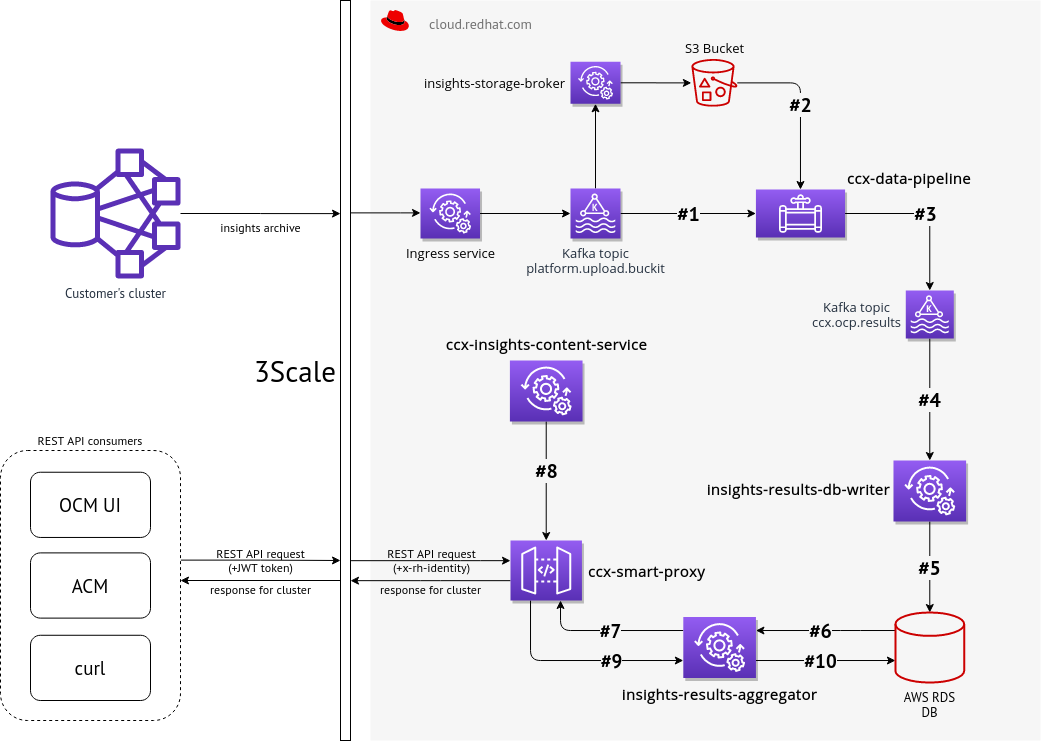

Whole data flow

- Event about new data from insights operator is consumed from Kafka. That event contains (among other things) URL to S3 Bucket

- If processing duration is configured, the service tries to process (see next steps) the event under the given amount of seconds

- Insights operator data is read from S3 Bucket and insights rules are applied to that data

- Results (basically organization ID + cluster name + insights results JSON) are stored back into Kafka, but into different topic

- That results are consumed by Insights rules aggregator service that caches them

- The service provides such data via REST API to other tools, like OpenShift Cluster Manager web UI, OpenShift console, etc.